Particle Flow

Introduction

Particle flow is a new interpolation tool for time-varying scientific simulation data. Given the starting and ending frames of a time range, the tool can reconstruct any intermediate frame from these two frames and the learned particle flow. Unlike traditional optical-flow-based methods, the tool does not rely on feature description and comparison, and it can adapt to complex transformations of features across data frames. It is therefore well suited to capturing and retaining feature dynamics in scientific datasets, and it can help scientists compress large original datasets effectively.

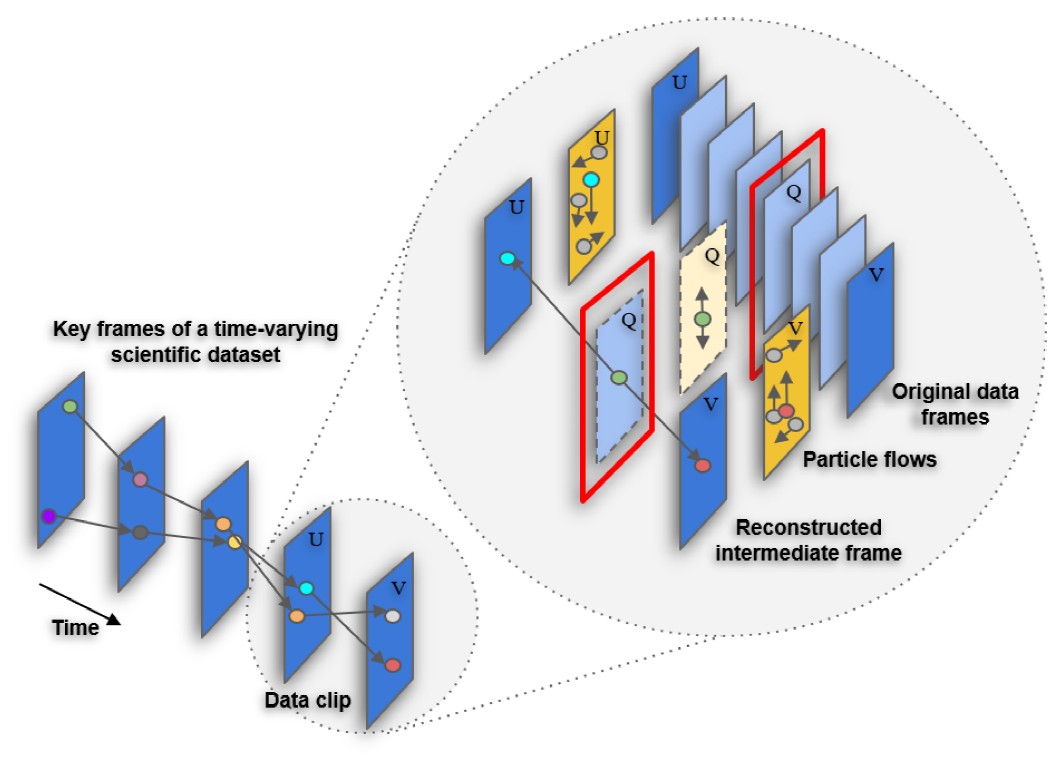

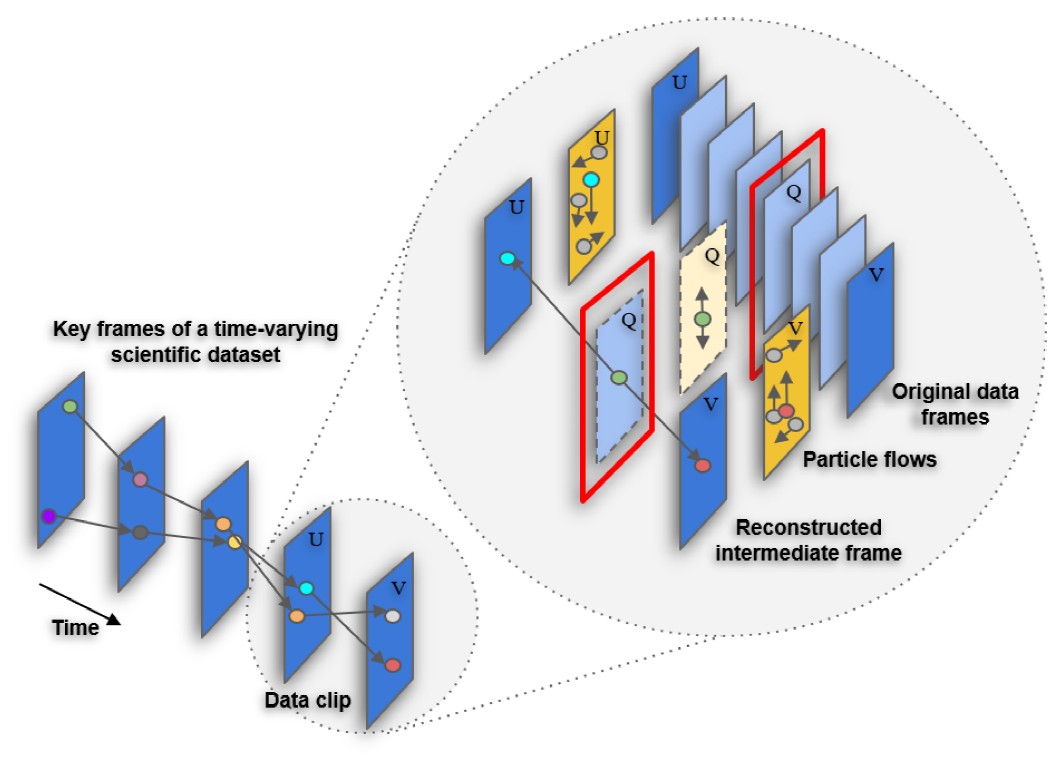

The above figure shows the framework of particle flow. Planes U and V represent the starting and ending frames of a data clip of a time-varying scientific dataset. We aim to learn the particle flows (i.e., a modified version of optical flows) for both Planes U and V (in dark yellow). The particle flow for an intermediate frame Q (in light yellow) is estimated from the flows on Planes U and V. Then, the values on Plane Q (in light blue with dashed line) are estimated as a linear interpolation of the corresponding values in Planes U and V. Finally, we compare the reconstructed values with the original ones on Plane Q (in red windows). The particle flows are learned by optimizing this similarity measurement, i.e., by minimizing the difference between the reconstructed and original values on Plane Q.
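The reconstruction step can be illustrated with a minimal NumPy/SciPy sketch. It is not the authors' implementation: the flow layout (per-grid-point (dy, dx) displacements, with U's flow pointing toward V and V's flow pointing toward U), the rule used to approximate the flow on Plane Q by blending the two endpoint flows, the mean-squared-error loss, and the function names warp, interpolate_frame, and reconstruction_loss are all hypothetical. Given flows, the frame Q at fraction t of the time range is obtained by sampling U and V along the flow and linearly interpolating the two samples.

```python
import numpy as np
from scipy.ndimage import map_coordinates


def warp(frame, disp):
    """Bilinearly sample `frame` at p + disp[p] for every grid point p.

    `disp` has shape (H, W, 2) and holds (dy, dx) displacements (assumed layout).
    """
    h, w = frame.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    coords = np.stack([yy + disp[..., 0], xx + disp[..., 1]])
    # order=1 -> bilinear; out-of-range samples take the nearest edge value.
    return map_coordinates(frame.astype(np.float64), coords, order=1, mode="nearest")


def interpolate_frame(frame_u, frame_v, flow_u, flow_v, t):
    """Hypothetical reconstruction of Plane Q at fraction t in [0, 1].

    flow_u: displacement of particles from U toward V (sampled on U's grid).
    flow_v: displacement of particles from V toward U (sampled on V's grid).
    """
    # Assumed approximation of the flow on Q: blend the two endpoint flows,
    # both expressed in the U -> V direction, on the shared grid.
    flow_q = (1.0 - t) * flow_u - t * flow_v
    # A particle at p on Q came from p - t*flow_q on U and reaches p + (1-t)*flow_q on V.
    from_u = warp(frame_u, -t * flow_q)
    from_v = warp(frame_v, (1.0 - t) * flow_q)
    # Linear interpolation of the corresponding values in U and V.
    return (1.0 - t) * from_u + t * from_v


def reconstruction_loss(q_pred, q_true):
    """Mean squared error between reconstructed and original values on Q (assumed metric)."""
    return float(np.mean((np.asarray(q_pred) - np.asarray(q_true)) ** 2))


# Usage: a feature that moves 2 cells to the right between U and V.
u = np.tile(np.arange(16, dtype=float) ** 2, (8, 1))
v = np.roll(u, 2, axis=1)
flow = np.zeros((8, 16, 2))
flow[..., 1] = 2.0
q = interpolate_frame(u, v, flow, -flow, t=0.5)
# Interior of Q equals U shifted by one cell, i.e. halfway along the motion.
print(reconstruction_loss(q[:, 1:15], u[:, 0:14]))  # prints 0.0
```

With zero flow the sketch reduces to plain linear interpolation, (1 - t) * U + t * V; with a uniform translation it moves the feature by t times the displacement. In the tool itself the flows are learned by optimizing the similarity on Plane Q, whereas here they are simply given as inputs.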

Source code

The source code for generating particle flow for a time-varying simulation dataset is available as particle_flow_src.zip.

Dataset

The tool supports regular volume data generated from simulations. The following datasets are examples:

- 2D slices of a vortex dataset: 2d_vorts.zip. Each time step consists of two files: a header file and a data file. The header file gives the number of grid points along each axis (x, y, z) and the data type (such as byte, int, or float). The data file stores the 3D or 2D (i.e., z = 1) volume array in C (row-major) order.

- Hurricane Isabel simulation data: a description of the dataset and its download link can be found here.
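As an illustration of the header/data layout described above, a minimal reader for one time step might look like the following. The exact header syntax is not specified here, so the sketch assumes a whitespace-separated text header of the form nx ny nz type (for example, 64 64 1 float), native byte order, "byte" meaning unsigned 8-bit, and x varying fastest in the data file. The function names and the type-name mapping are hypothetical.

```python
import numpy as np

# Hypothetical mapping from header type names to NumPy dtypes.
DTYPES = {"byte": np.uint8, "int": np.int32, "float": np.float32, "double": np.float64}


def read_header(header_path):
    """Parse an assumed header of the form 'nx ny nz type'."""
    with open(header_path) as f:
        tokens = f.read().split()
    nx, ny, nz = (int(tok) for tok in tokens[:3])
    return nx, ny, nz, DTYPES[tokens[3].lower()]


def read_volume(header_path, data_path):
    """Load one time step as an array indexed [z, y, x], or [y, x] when nz == 1."""
    nx, ny, nz, dtype = read_header(header_path)
    # Raw binary values; native byte order is assumed.
    data = np.fromfile(data_path, dtype=dtype)
    if data.size != nx * ny * nz:
        raise ValueError(f"expected {nx * ny * nz} values, found {data.size}")
    # C row-major order with x varying fastest -> shape (nz, ny, nx).
    volume = data.reshape(nz, ny, nx)
    return volume[0] if nz == 1 else volume
```

Returning a 2D array for z = 1 keeps the slice data convenient for image-style processing; drop the final squeeze if a uniform 3D shape is preferred.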

Dependencies

The tool requires imageio, scipy, tensorflow, argparse, numpy, and matplotlib.

Running

The code can be run as follows:

- open a terminal;

- run

python train_particle_flow.py --input_volumes_path path

where path specifies the input path of the time steps of a simulation dataset.
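For readers adapting the script, the sketch below shows one way a Python entry point could accept the --input_volumes_path flag with argparse (one of the listed dependencies) and gather the time-step files in order. It is a hypothetical illustration, not the contents of train_particle_flow.py; the helper names are made up, and it assumes that file names sort in time order (for example, zero-padded step numbers).

```python
import argparse
import os


def parse_args(argv=None):
    """Parse the command-line flag used in the command above (sketch)."""
    parser = argparse.ArgumentParser(description="Particle flow training (illustrative sketch)")
    parser.add_argument("--input_volumes_path", required=True,
                        help="directory containing the time steps of a simulation dataset")
    return parser.parse_args(argv)


def list_time_steps(path):
    """Return the files in `path`, sorted by name (assumed to follow time order)."""
    return sorted(
        os.path.join(path, name)
        for name in os.listdir(path)
        if os.path.isfile(os.path.join(path, name))
    )


if __name__ == "__main__":
    args = parse_args()
    for step_file in list_time_steps(args.input_volumes_path):
        print(step_file)
```

Lexicographic sorting puts t10 before t2, which is why zero-padded names are assumed here.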

Publication

A Scientific Data Representation Through Particle Flow Based Linear Interpolation

Yu Pan, Feiyu Zhu, Hongfeng Yu.

Proceedings of 2019 IEEE Fifth International Conference on Big Data Computing Service and Applications (BigDataService), San Francisco, CA, April 4-9, 2019.

DOI: 10.1109/BigDataService.2019.00010 [PAPER]

Citation

@inproceedings{pan2019scientific,
  title={A Scientific Data Representation Through Particle Flow Based Linear Interpolation},
  author={Pan, Yu and Zhu, Feiyu and Yu, Hongfeng},
  booktitle={2019 IEEE Fifth International Conference on Big Data Computing Service and Applications (BigDataService)},
  pages={19--28},
  year={2019},
  organization={IEEE}
}

Contact